1. Heisenberg Uncertainty Principle

The Heisenberg Uncertainty principle states that a signal cannot be both highly concentrated in time and highly concentrated in frequency. For example, consider a square-integrable function  normalised so that

normalised so that  . In this case,

. In this case,  defines a probability distributions on the real line. The Fourier isometry shows that its Fourier transform

defines a probability distributions on the real line. The Fourier isometry shows that its Fourier transform  also defines a probability distribution

also defines a probability distribution  . In order to measure how spread-out these two probability distributions are, one can use their variance. The uncertainty principle states that

. In order to measure how spread-out these two probability distributions are, one can use their variance. The uncertainty principle states that

where  designates the variance of the distribution

designates the variance of the distribution  . This shows for example that

. This shows for example that  and

and  cannot be simultaneously less than

cannot be simultaneously less than  .

.

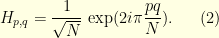

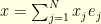

We can play the same with a vector  and its (discrete) Fourier transform

and its (discrete) Fourier transform  . The Fourier matrix

. The Fourier matrix  is defined by

is defined by

where the normalisation coefficient  ensures that

ensures that  . The Fourier inversion formula reads

. The Fourier inversion formula reads  . To measure how spread-out the coefficients of a vector

. To measure how spread-out the coefficients of a vector  are, one can look at the size of its support

are, one can look at the size of its support

![\displaystyle \mu(v) \, := \, \textbf{Card} \Big\{ k \in [1,N]: \; v_k=0 \Big\}. \ \ \ \ \ (3)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle++%5Cmu%28v%29+%5C%2C+%3A%3D+%5C%2C+%5Ctextbf%7BCard%7D+%5CBig%5C%7B+k+%5Cin+%5B1%2CN%5D%3A+%5C%3B+v_k%3D0+%5CBig%5C%7D.+%5C+%5C+%5C+%5C+%5C+%283%29&bg=ffffe3&fg=000000&s=0&c=20201002)

If an uncertainty principle holds, one should be able to bound  from below. There is no universal constant

from below. There is no universal constant  such that

such that

for any  and

and  . Indeed, if

. Indeed, if  and

and  one readily checks that

one readily checks that  and

and  so that

so that  . Nevertheless, in this case this gives

. Nevertheless, in this case this gives  . Indeed, a beautiful result of L. Donoho and B. Stark shows that

. Indeed, a beautiful result of L. Donoho and B. Stark shows that

Maybe more surprisingly, and crucial to applications, they even show that this principle can be made robust by taking noise and approximations into account. This is described in the next section.

2. Robust Uncertainty Principle

Consider a subset  of indices and the orthogonal projection

of indices and the orthogonal projection  on

on  . In other words, if

. In other words, if  , then

, then

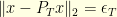

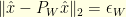

We say that a vector  is

is  -concentrated on

-concentrated on  if

if  . The

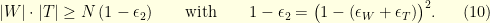

. The  -robust uncertainty principle states that if

-robust uncertainty principle states that if  is

is  concentrated on

concentrated on  and

and  is

is  -concentrated on

-concentrated on  then

then

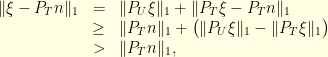

Indeed, the case  gives Equation (5). The proof is surprisingly easy. On introduces the reconstruction operator

gives Equation (5). The proof is surprisingly easy. On introduces the reconstruction operator  defined by

defined by

In words: take a vector, delete the coordinate outside  , take the Fourier transform, delete the coordinate outside

, take the Fourier transform, delete the coordinate outside  , finally take the inverse Fourier transform. The special case

, finally take the inverse Fourier transform. The special case  simply gives

simply gives  . The proof consists in bounding the operator norm of

. The proof consists in bounding the operator norm of  from above and below. The existence of a vector

from above and below. The existence of a vector  that is

that is  -concentrated such that

-concentrated such that  is

is  -concentrated gives a lower bound. In details:

-concentrated gives a lower bound. In details:

In summary, the reconstruction operator  always satisfies

always satisfies  . Moreover, the existence of

. Moreover, the existence of  satisfying

satisfying  and

and  implies the lower bound

implies the lower bound  . Therefore, the sets

. Therefore, the sets  and

and  must verify the uncertainty principle

must verify the uncertainty principle

Notice that we have only use the fact that the entries of the Fourier matrix are bounded in absolute value by  . We could have use for example any other unitary matrix

. We could have use for example any other unitary matrix  . The bound

. The bound  for all

for all  gives the uncertainty principle

gives the uncertainty principle  . In general

. In general  since

since  is an isometry.

is an isometry.

One can work out an upper bound and a lower bound for the reconstruction operator  using the

using the  -norm instead of the

-norm instead of the  -norm. Doing so, one can prove that if

-norm. Doing so, one can prove that if  and

and  then

then

3. Stable  Reconstruction

Reconstruction

Let us see how the uncertainty principle can be used to reconstruct a corrupted signal. For example, consider a discrete signal  corrupted by some noise

corrupted by some noise  . In top of that, let us suppose that on the set

. In top of that, let us suppose that on the set  the receiver does not receive any information. In other words, the receiver can only observe

the receiver does not receive any information. In other words, the receiver can only observe

In general, it is impossible to recover the original  , or an approximation of it, since the data

, or an approximation of it, since the data  for

for  are lost forever. In general, even if the received signal

are lost forever. In general, even if the received signal  is very weak, the deleted data

is very weak, the deleted data  might be huge. Nevertheless, under the assumption that the frequencies of

might be huge. Nevertheless, under the assumption that the frequencies of  are supported by a set

are supported by a set  satisfying

satisfying  , the reconstruction becomes possible. It is not very surprising since the hypothesis

, the reconstruction becomes possible. It is not very surprising since the hypothesis  implies that the signal

implies that the signal  is sufficiently smooth so that the knowledge of

is sufficiently smooth so that the knowledge of  on

on  is enough to construct an approximation of

is enough to construct an approximation of  for

for  . We assume that the set

. We assume that the set  is known to the observer. Since the components on

is known to the observer. Since the components on  are not observed, one can suppose without loss of generality that there is no noise on these components (

are not observed, one can suppose without loss of generality that there is no noise on these components ( we have

we have  for

for  ): the observation

): the observation  can be described as

can be described as

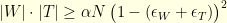

It is possible to have a stable reconstruction of the original signal  if the operator

if the operator  can be inverted: there exists a linear operator

can be inverted: there exists a linear operator  such that

such that  for every

for every  . If this is the case, the reconstruction

. If this is the case, the reconstruction

satisfies  so that

so that  . A sufficient condition for

. A sufficient condition for  to exist is that

to exist is that  for every

for every  . This is equivalent to

. This is equivalent to

Since for  we have

we have  it follows that

it follows that  where

where  is the reconstruction operator. The condition

is the reconstruction operator. The condition  thus follows from the bound

thus follows from the bound  . Consequently the operator

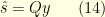

. Consequently the operator  is invertible since

is invertible since  satisfies

satisfies

Since  we have the bound

we have the bound  . Therefore

. Therefore

Notice also that  can easily and quickly be approximated using the expansion

can easily and quickly be approximated using the expansion

Nevertheless, there are two things that are not very satisfying:

- the bound

is extremely restrictive. For example, if

is extremely restrictive. For example, if  of the component are corrupted, this imposes that the signal has only

of the component are corrupted, this imposes that the signal has only  (and not

(and not  ) non-zero Fourier coefficients.

) non-zero Fourier coefficients.

- in general, the sets

and

and  are not known by the receiver.

are not known by the receiver.

The theory of compressed sensing addresses these two questions. More on that in forthcoming posts, hopefully … Emmanuel Candes recently gave extremely interesting lectures on compressed sensing.

4. Exact  Reconstruction: Logan Phenomenon

Reconstruction: Logan Phenomenon

Let us consider a slightly different situation. A small fraction of the components of a signal  are corrupted. The noise is not assumed to be small. More precisely, suppose that one observes

are corrupted. The noise is not assumed to be small. More precisely, suppose that one observes

where  is the set of corrupted components and

is the set of corrupted components and  is an arbitrary noise that can potentially have very high intensity. Moreover, the set

is an arbitrary noise that can potentially have very high intensity. Moreover, the set  is unknown to the receiver. In general, it is indeed impossible to recover the original signal

is unknown to the receiver. In general, it is indeed impossible to recover the original signal  . Surprisingly, if one assumes that the signal

. Surprisingly, if one assumes that the signal  is band-limited

is band-limited  the Fourier transform of

the Fourier transform of  is supported by a known set

is supported by a known set  that satisfies

that satisfies

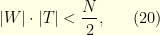

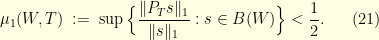

it is possible to recover exactly the original  . The main argument is that the condition (20) implies that the signal

. The main argument is that the condition (20) implies that the signal  has more that

has more that  of its energy on

of its energy on  in the sense that

in the sense that  . Consequently, since

. Consequently, since  is the set where the signal

is the set where the signal  is perfectly known, enough information is available to recover the original signal

is perfectly known, enough information is available to recover the original signal  . To prove the inequality

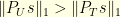

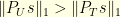

. To prove the inequality  , it suffices to check that

, it suffices to check that

In this case we have  , which prove that

, which prove that  has more that

has more that  of its energy on

of its energy on  . Indeed, the same conclusion holds with the

. Indeed, the same conclusion holds with the  -norm if the condition

-norm if the condition  holds. The magic of the

holds. The magic of the  -norm is that condition

-norm is that condition  implies that the signal

implies that the signal  is solution of the optimisation problem

is solution of the optimisation problem

This would not be true if one were using the  norm instead. This idea was first discovered in a slightly different context in Logan’s thesis (1965). More details here. To prove (22), notice that any

norm instead. This idea was first discovered in a slightly different context in Logan’s thesis (1965). More details here. To prove (22), notice that any  can be written as

can be written as  with

with  . Therefore, since

. Therefore, since  , it suffices to show that

, it suffices to show that

This is equivalent to proving that for any non-zero  we have

we have

It is now that the  -norm is crucial. Indeed, we have

-norm is crucial. Indeed, we have

which gives the conclusion. This would not work for any  -norm with

-norm with  since in general

since in general  : the inequality is in the wrong sense. In summary, as soon as the condition

: the inequality is in the wrong sense. In summary, as soon as the condition  is satisfied, one can recover the original signal by solving the

is satisfied, one can recover the original signal by solving the  minimisation problem (22).

minimisation problem (22).

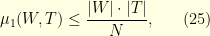

\noindent Because it is not hard to check that in general we always have

this shows that the condition  implies that exact recovery is possible! Nevertheless, the condition

implies that exact recovery is possible! Nevertheless, the condition  is far from satisfying. The inequality is tight but in practice it happens very often that

is far from satisfying. The inequality is tight but in practice it happens very often that  even if

even if  is much bigger that

is much bigger that  .

.

The take away message might be that perfect recovery is often possible if the signal has a sparse representation in an appropriate basis (in the example above, the usual Fourier Basis): the signal can then be recovered by  -minimisation. For this to be possible, the representation basis (here the Fourier basis) must be incoherent with the observation basis in the sense that the coefficients of the change of basis matrix (here, Fourier matrix) must be small

-minimisation. For this to be possible, the representation basis (here the Fourier basis) must be incoherent with the observation basis in the sense that the coefficients of the change of basis matrix (here, Fourier matrix) must be small  if

if  is the observation basis and

is the observation basis and  is the representation basis then

is the representation basis then  must be small for every

must be small for every  . For example, for the Fourier basis we have

. For example, for the Fourier basis we have  for every

for every  .

.

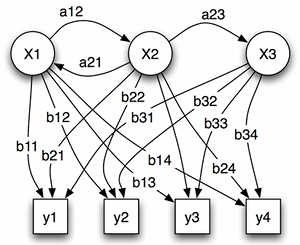

black: observation basis, green:representation basis

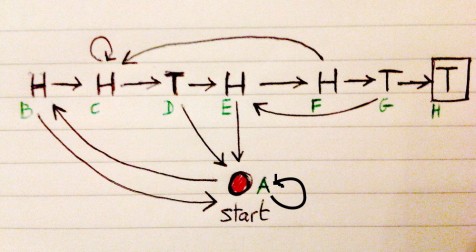

where

is a hidden Markov chain and

is an observation process. The notation

is a shortcut for

. This is usually called a Hidden Markov Model in the literature. If the Markov chain

evolves on in

then the distribution

is a probability on

.

. If

is difficult to sample directly from and that it is difficult to use traditional MCMC methods (because of the dimensionality, multi-modality, poor mixing properties, etc…), one can try to use SMC methods to study the distribution

. To this end, one can introduce a sequence of bridging distributions

is typically very easy to sample from and

. A traditional choice is

where

. The bigger the index

, the more intricate the distribution

is. The problem is that each distribution

has the same dimensionality as the original distribution

so that it is difficult to use all the machinery of SMC methods. For the sake of concreteness, let us suppose that

is a distribution on

. A fundamental remark that is the base of what is now known as Sequential Monte Carlo samplers is that

can be seen as the marginal distribution of the probability

are Markov kernels in the sense that for any

we have

. The distribution

lives on

and one can use the traditional SMC methods to explore the sequence of distributions

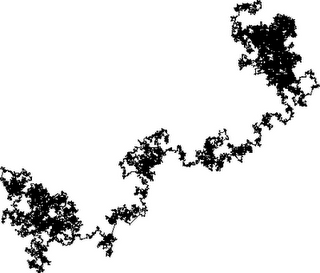

. This algorithm was devised a few years ago by Pierre Del Moral, Arnaud Doucet and Ajay Jasra. It is extremely powerful and several other algorithms can be seen as particular cases of it. Because I had never implemented the algorithm before, I tried yesterday to see how this worked on a very simple example. My target distribution was an equal mixture of seven Gaussian distributions in

with unit covariance matrices and centred at the vertices of a regular heptagon with radius

. I started with

particles at the centre of the heptagon and let them evolved according to a random walk. When the Effective Sampling Size (ESS) was too small (less than

), the whole particle system was regenerated. In order to obtain a sweet movie, I used

bridge distributions

.