Sequential Monte Carlo (SMC) methods are usually designed to deal with probability distributions of increasing dimensions. The canonical example is the sequence of distributions where

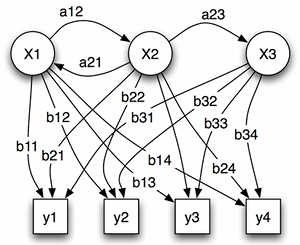

is a hidden Markov chain and

is an observation process. The notation

is a shortcut for

. This is usually called a Hidden Markov Model in the literature. If the Markov chain

evolves on in

then the distribution

is a probability on

.

It turns out that one can use the same kind of ideas to study a single distribution . If

is difficult to sample directly from and that it is difficult to use traditional MCMC methods (because of the dimensionality, multi-modality, poor mixing properties, etc…), one can try to use SMC methods to study the distribution

. To this end, one can introduce a sequence of bridging distributions

where is typically very easy to sample from and

. A traditional choice is

where

. The bigger the index

, the more intricate the distribution

is. The problem is that each distribution

has the same dimensionality as the original distribution

so that it is difficult to use all the machinery of SMC methods. For the sake of concreteness, let us suppose that

is a distribution on

. A fundamental remark that is the base of what is now known as Sequential Monte Carlo samplers is that

can be seen as the marginal distribution of the probability

where are Markov kernels in the sense that for any

we have

. The distribution

lives on

and one can use the traditional SMC methods to explore the sequence of distributions

. This algorithm was devised a few years ago by Pierre Del Moral, Arnaud Doucet and Ajay Jasra. It is extremely powerful and several other algorithms can be seen as particular cases of it. Because I had never implemented the algorithm before, I tried yesterday to see how this worked on a very simple example. My target distribution was an equal mixture of seven Gaussian distributions in

with unit covariance matrices and centred at the vertices of a regular heptagon with radius

. I started with

particles at the centre of the heptagon and let them evolved according to a random walk. When the Effective Sampling Size (ESS) was too small (less than

), the whole particle system was regenerated. In order to obtain a sweet movie, I used

bridge distributions

.

The result is clear and quite impressive. Indeed, this could have been made much more efficient by taking much less bridge distributions. Some recent theory has been developed to analyse the stability of the algorithm in high dimensional situations. The analysis described in the article uses a scaling limit approach very similar to some ideas used to analyse MCMC algorithms and some more recent developements along the same lines that we have done with Andrew and Natesh. Very exciting stuff!

[EDIT: 2 October 2012]

I received several emails asking me to describe how to obtain the short animation showing the evolution of the particles system. The code is available here. This is a quick python script producing a bunch of .png files. It then suffices to stick them together to obtain an animated .gif file. This can for example easily be done using the free (and very good) photoshop-like software GIMP. Open all the png files as different layers. Export the result as a .gif file with the option ‘animated gif’ checked. Voila!

Andy McKenzie said,

October 1, 2012 at 6:48 pm

Very cool. Do you mind sharing how you implemented the algorithm and made that movie?

David C. said,

November 22, 2013 at 9:47 pm

Really great animation there. I am just learning about SMC methods, and it seems the code you posted on CodeViewer has expired. Would you mind sharing it again?

Parallel resampling in the particle filter | Statisfaction said,

May 12, 2014 at 12:33 pm

[…] the bottleneck. In the case of the particle filter (or any sequential Monte Carlo method such as SMC samplers), that bottleneck is the resampling step. The article investigates this issue and numerically […]

Tim Reynolds said,

January 5, 2017 at 10:41 pm

Any chance you can repost that code? I really like the animation.

alek said,

January 6, 2017 at 3:09 am

Hi Tim,

I just found back this very old piece of Python code. It is pretty ugly, but here it is:

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

SMC sampler multimodal

hosted with ❤ by GitHub