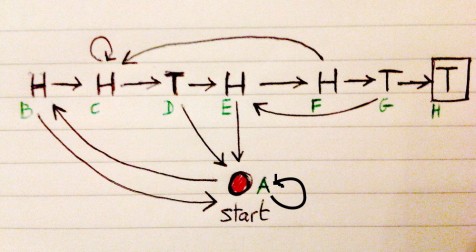

Start flipping a coin. How long should you wait (on average) before observing the pattern !? Easy you might say, that’s simply the hitting time of the state

starting from state

of the following Markov chain.

Indeed, one can solve this kind of problem using a first step analysis. In other words, if is the expected number of steps before reaching

starting from

,

is the expected number of steps before reaching

starting from

,

is the expected number of steps before reaching

starting from

, etc… one can readily see that

verify the system of equations

After a finite amount of time and coffee one then come up with the answer . That was a little bit tedious and I don’t really want to know how long one should wait on average before observing the sequence

.

It turns out that there is a very neat way to approach this kind of problems without solving any linear system. Apparently, this trick was discovered in the 80s by Shuo-Yen Robert Li. As often in probability, this boils down to introducing a clever martingale. Suppose that one wants to know how long it takes on average before observing the magic coin pattern of length

with

for all

. To this end, one will introduce an army of gamblers, a casino, a game of chance and a martingale. The martingale

describes the amount of money earned by the casino. At each round (starting at round

) the casino tosses a coin and the gamblers can make bets. If a gambler starts betting just before round

, he wins if the coin shows

. If he decides to bet again at round

, he wins if the coin shows

, etc… Suppose that there are infinitely many gamblers

and that gambler

starts betting just before round

. The game is fair in the sense that if a gambler bets an amount

, with probability

he gets

and with probability

he losses his bet. One supposes that gambler

starts betting

dollar just before round

and keeps reinvesting his total capital until he either losses everything (total loss equals

dollar) or observes the magic coin pattern

(total earning equals

). Indeed, the amount of money earned by the casino is a martingale and

. For example,

if the first gambler

loses his bet and

if the wins. Consider the time

when the magic coin pattern first appears. This is a stopping time and the optional stopping theorem for martingales shows that

. Indeed, one can also compute this quantity another way. By time

, all the losers have given

dollar to the casino. Also, if the gambler

is still in the game he has won a total amount

. in other words,

where if the gambler

is still in the game after the round

and

otherwise. The fundamental remark is that the quantity

is not random so that

. Indeed, right after round

one can know with certainty whether

is still in the game or not. Gambler

is in the game only if the

last letters of the magic word

are the same as the first

letters. For example, if the magic word is

then the gamblers who have seen the pattern

,

and

at time

are still in the game when the magic word first appear. In this case this shows that

.

For example, this immediately shows that we have to wait on average coin tosses before observing the pattern

.